Biological analogy

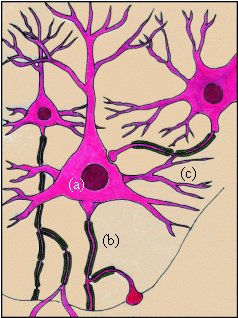

Artificial Neural Networks (ANN) form a family of inductive techniques that mimic biological information processing. Most animals possess a neural system that processes information. Biological neural systems consist of neurones, which form a network through synaptic connections between axons and dendrites. Through these connections, neurones exchange chemo-electrical signals to establish behaviour. Neural systems can adapt their behaviour by changing the strength of these connections; more complex learning behaviour can occur by forming new connections. Changes in existing connections, and the growth of new ones, take place under the influence of the signal exchange between neurones.


Figure 7. Biological neural tissue. Neural cells (a), axons (b) and dendrites (c) are visible. Where axons touch dendrites or nuclei, synaptic contacts are formed.


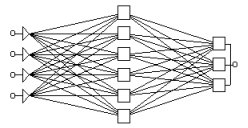

Figure 8. Layered artificial neural network. On the left, a pattern is coded. The activation rule propagates the pattern to the right side through the central layer, using the weighted connections. From here, the output can be read. Based on the input-output relation, the learning rule may update the weights.


Artificial implementation

Artificial neural networks are simplified models of their biological counterpart. They consist of the following elements:

- Processing units (models of neurones)

- Weighted interconnections (models of neural connections)

- An activation rule, to propagate signals through the network (a model of the signal exchange in biological neurones)

- A learning rule (optional), specifying how weights are adjusted on the basis of the established behaviour
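The four elements can be sketched for a single processing unit. This is only an illustration under stated assumptions: the sigmoid activation, the delta rule used as learning rule, the learning rate, and the names `activate` and `learn` are all choices made for this example, not something the text prescribes.

```python
import math

def activate(inputs, weights, bias):
    """Activation rule (assumed): weighted sum of the inputs passed through a sigmoid."""
    net = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-net))

def learn(inputs, weights, bias, target, rate=0.5):
    """Learning rule (assumed: delta rule): shift weights to reduce the output error."""
    out = activate(inputs, weights, bias)
    delta = (target - out) * out * (1.0 - out)  # error times sigmoid slope
    new_weights = [w + rate * delta * x for w, x in zip(weights, inputs)]
    new_bias = bias + rate * delta
    return new_weights, new_bias

# Train one processing unit on the logical OR of two inputs.
samples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = [0.0, 0.0], 0.0  # weighted interconnections, initially neutral
for _ in range(2000):
    for x, t in samples:
        weights, bias = learn(x, weights, bias, t)

predictions = [round(activate(x, weights, bias)) for x, _ in samples]
print(predictions)
```

The unit itself holds no explicit rule for OR; whatever it has learned is contained in the two weights and the bias.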

The most common ANNs are structured in layers (see Figure 8), with an input layer where data is coded as a numerical pattern, one or more hidden layers to store intermediate results, and an output layer that contains the output result of the network.
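As a rough sketch of how a pattern might travel through such a layered network, the example below feeds a numerical input pattern through one hidden layer to an output layer. The layer sizes, the weight values, the sigmoid activation and the function names are hypothetical and chosen only for illustration.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(activations, weights, biases):
    """Propagate one layer; weights[j][i] connects input unit i to unit j."""
    return [
        sigmoid(sum(w * a for w, a in zip(row, activations)) + b)
        for row, b in zip(weights, biases)
    ]

def network_forward(pattern, layers):
    """Feed a numerical input pattern through every layer in turn."""
    activations = list(pattern)
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

# Hypothetical 3-2-1 network with arbitrary illustrative weights.
layers = [
    ([[0.5, -0.4, 0.1], [-0.3, 0.8, 0.2]], [0.0, 0.1]),  # input -> hidden
    ([[1.0, -1.0]], [0.0]),                              # hidden -> output
]
output = network_forward([1.0, 0.0, 1.0], layers)
print(output)
```

The hidden-layer activations are the intermediate results mentioned above; only the final list is read as the output of the network.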

Neural Network Model Representation

In contrast to symbolic techniques such as decision trees (see link above), the representation of knowledge in a neural network is not easy to comprehend. The set of weights in a network, combined with input and output coding, realises the functional behaviour of the network. As illustrated in Figure 3, such data does not give humans an understanding of the learned model.

Some alternative approaches for representing a neural network are:

- Numeric: show the weight matrix; not very informative, but it illustrates why a neural network is called sub-symbolic: the knowledge is not represented explicitly, but contained in a distributed representation over many weight factors.

- Performance: show that a neural network performs well on the concepts in the data set, even better than other techniques, and do not try to interpret the model.

- Extract knowledge: techniques exist to extract the model captured in a neural network, e.g. by running a symbolic technique on the same data or by transferring an NN model into an understandable form. Such techniques can yield statistical models as well as symbolic models.
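To make the contrast between the numeric view and an extracted symbolic view concrete, here is a minimal sketch. It assumes a tiny network of threshold units with hand-set, hypothetical weights, and it uses plain enumeration of all binary inputs as the extraction step. That is only one simple possibility and is not claimed to be one of the specific techniques referred to above.

```python
from itertools import product

def step(x):
    return 1 if x > 0 else 0

# Hypothetical hand-set weights (numeric view): each entry is (weights, bias).
# A trained network would be queried in the same way.
HIDDEN = [([1.0, 1.0], -0.5), ([1.0, 1.0], -1.5)]
OUTPUT = ([1.0, -1.0], -0.5)

def network(x1, x2):
    hidden = [step(w[0] * x1 + w[1] * x2 + b) for w, b in HIDDEN]
    w, b = OUTPUT
    return step(w[0] * hidden[0] + w[1] * hidden[1] + b)

def extract_rules(net, n_inputs):
    """Treat the network as a black box; list every binary input that yields 1."""
    return [bits for bits in product([0, 1], repeat=n_inputs) if net(*bits) == 1]

rules = extract_rules(network, 2)
for x1, x2 in rules:
    print(f"IF x1={x1} AND x2={x2} THEN output=1")
```

The weight lists alone do not make it obvious what the network computes, while the two extracted rules show that it outputs 1 exactly when the inputs differ. Exhaustive enumeration only works for a handful of binary inputs, so it should be read as a toy illustration rather than a practical method.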
